In school, I worked with Ben Spiegel in Professor George Konidaris' Intelligent Robot Lab, a part of Brown's Integrative, General Artificial Intelligence Group (bigAI).

In our draft paper, "From Icons to Symbols: Simulating the Evolution of Ideographic Languages in a Multi-Agent Reinforcement Setting," we built agents that could not only develop a language but also signify concepts. To quote the work:

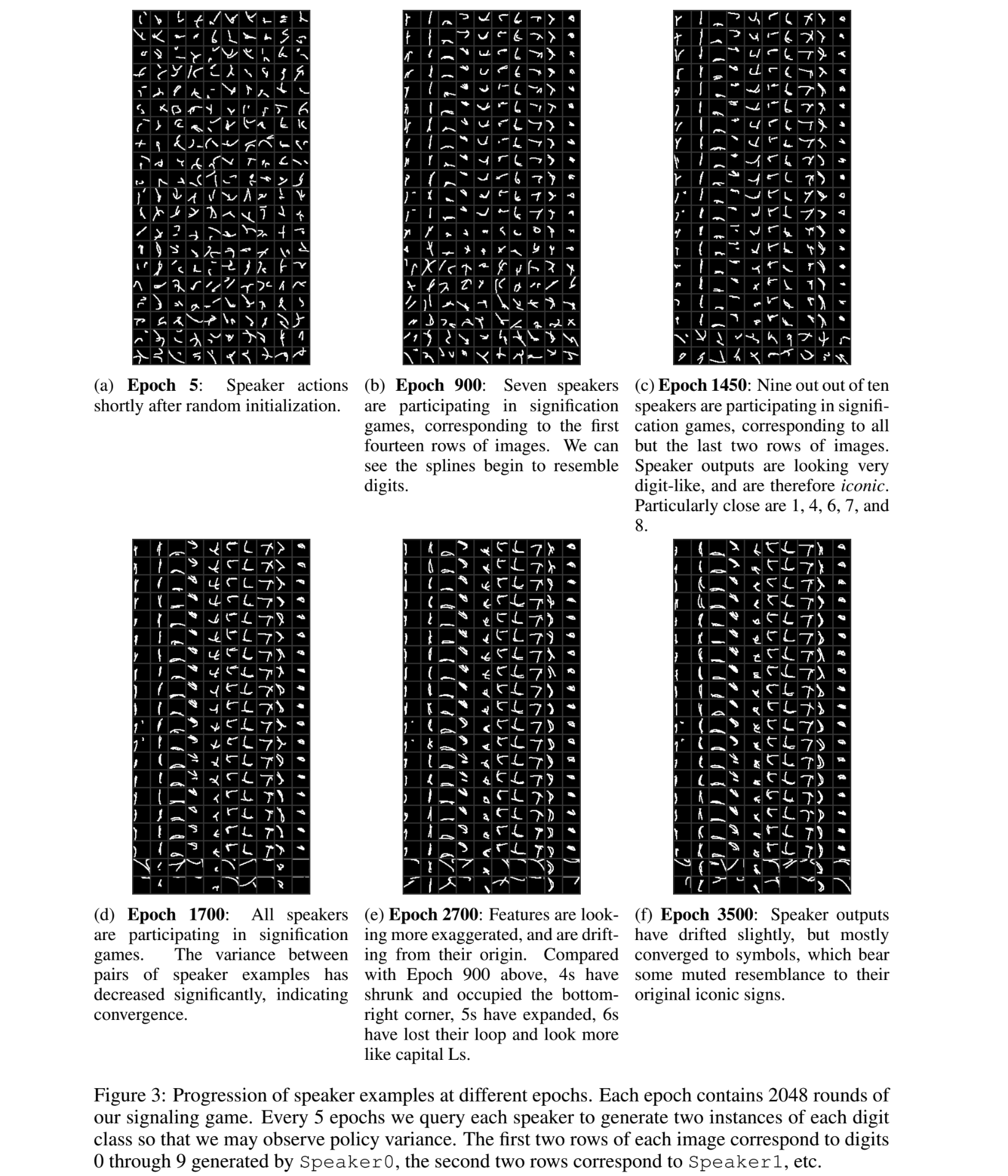

We explore this line of thinking by designing experiments to investigate the emergence of signification, the formation of arbitrary sign-referent connections, in a novel multi-agent reinforcement learning setting called the Simplified Signification Game. Inspired by the evolution of ideographic languages such as Chinese, we design experiments that offer a plausible computational account for both the emergence of basic signification as an act of manipulation, and for the evolution of iconic signs into symbols, which do not resemble their referents.

To study this, we built a setting in JAX with 'speaker' and 'listener' agents. Ben led the project, and I was the only other engineer, contributing across the codebase. Some examples: optimizing functions for JAX's just-in-time compilation, running profiling and performance benchmarks, and logging our result images to Weights and Biases. I also worked heavily on our paper submission: writing several sections, authoring graphics, finding and summarizing references, and proofreading our drafts.

We put forth both:

(1) a plausible account for the emergence of signification using visual signs and (2) the hypothesis that some visual symbols are the remnants of icons that have collapsed and drifted with repeated use over time. Underlying our main hypotheses is that symbols are not unique to humans, nor the animal kingdom, but are merely a consequence of repeated processes of signification between reward-maximizing learning agents.

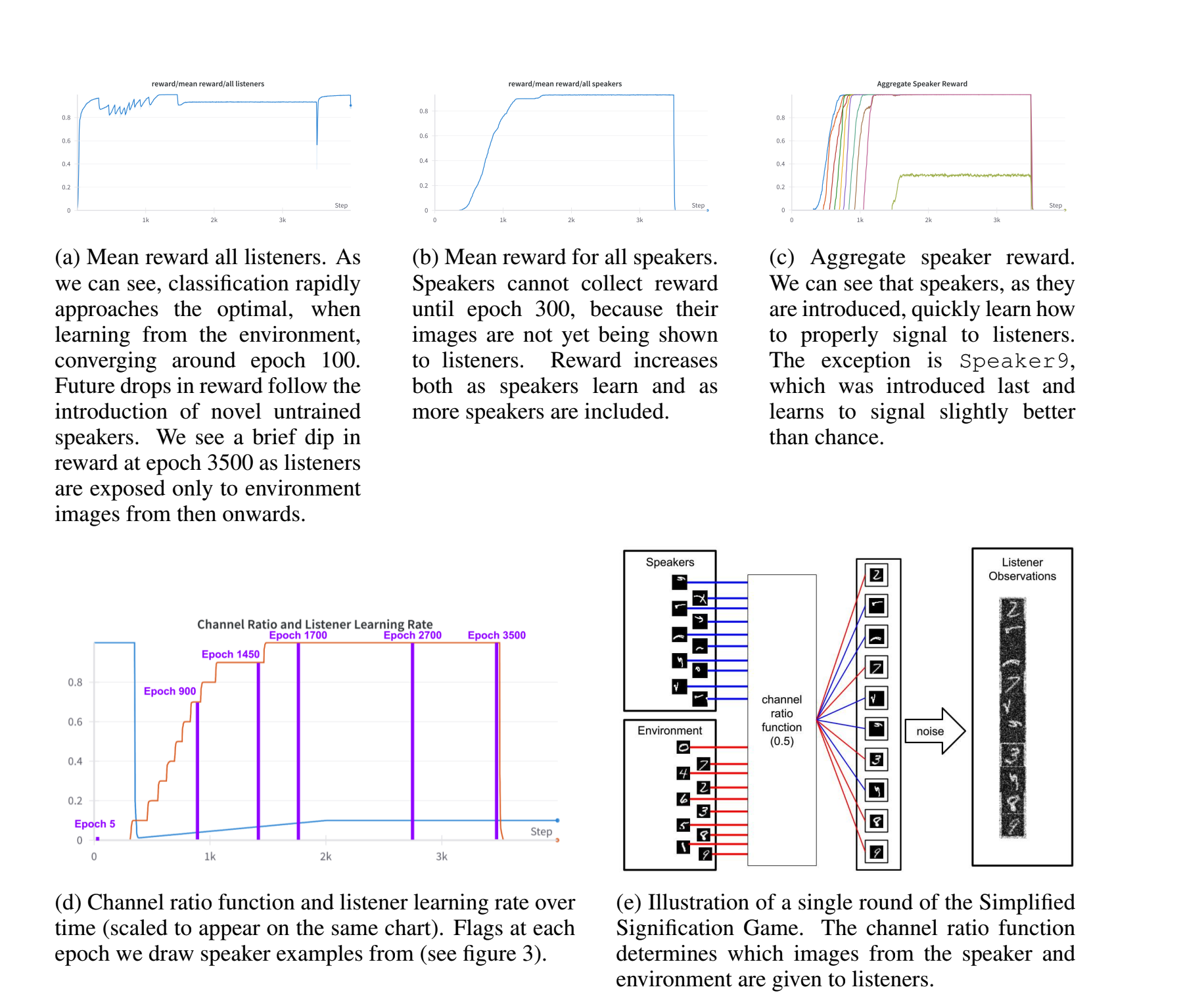

We did this by training 'listener' agents to recognize MNIST digits, rewarding them for each correct classification. Over time, we swapped out MNIST digits from the 'environment' for canvases 'drawn' by speaker agents. Each canvas was simply a combination of visual splines whose parameters were chosen by a speaker. A speaker was also rewarded whenever the listener correctly classified the 'image' it had drawn. As training went on, we introduced more and more speaker images, and each speaker (by reward maximization) learned to signify.
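To make the swap-in idea concrete, here is a minimal JAX sketch of the mixing step and the two reward signals described above. It is an illustration under stated assumptions, not our actual code: the function names (`speaker_fraction`, `mix_batch`, `rewards`), the linear schedule, and the 0/1 reward values are all hypothetical, and the spline renderer and the networks themselves are left out.

```python
import jax
import jax.numpy as jnp


def speaker_fraction(step, total_steps):
    """Assumed linear schedule: share of images replaced by speaker canvases."""
    return jnp.clip(step / total_steps, 0.0, 1.0)


@jax.jit
def mix_batch(key, env_images, speaker_images, fraction):
    """Swap each environment image for a speaker canvas with probability `fraction`."""
    use_speaker = jax.random.bernoulli(key, fraction, (env_images.shape[0],))
    # Broadcast the per-example mask over the image height and width.
    mixed = jnp.where(use_speaker[:, None, None], speaker_images, env_images)
    return mixed, use_speaker


@jax.jit
def rewards(listener_logits, labels, use_speaker):
    """Listener is rewarded for a correct guess; speaker only for its own canvases."""
    correct = (jnp.argmax(listener_logits, axis=-1) == labels).astype(jnp.float32)
    listener_reward = correct
    speaker_reward = correct * use_speaker.astype(jnp.float32)
    return listener_reward, speaker_reward


# Usage with placeholder data (zeros stand in for MNIST, ones for drawn canvases).
key = jax.random.PRNGKey(0)
env_images = jnp.zeros((4, 28, 28))
speaker_images = jnp.ones((4, 28, 28))
fraction = speaker_fraction(step=50, total_steps=100)
mixed, use_speaker = mix_batch(key, env_images, speaker_images, fraction)
```

Returning the `use_speaker` mask alongside the mixed batch keeps the bookkeeping simple: the same mask decides which examples count toward the speaker's reward.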

This page is a summary of a much longer work; email me and I'm happy to send over more! Our general interest was in exploring 'computational semiotics,' a relatively new field. I joined the project largely out of excitement about this, sparked by one of Ben's first emails: "this research has deep ties to Semiotics and this first paper idea was directly inspired by passages in Umberto Eco’s A Theory of Semiotics (I know, it may sound crazy)."

I was quite pleased by our results, some of which I've included below:

I did this work as part of Prof. Konidaris' "Reintegrating AI," a grad-level machine learning class. The premise is that, when “artificial intelligence” was coined at a famed Dartmouth conference in the 1950s, its originators imagined an “integrated” artificial intelligence that functioned with human limitations: learning from and acting in the physical world, like humans. The field then splintered, and different researchers took on different tasks in narrow domains: getting better at robotics, or at object detection, or other small tasks. The course is invested in new calls for the “reintegration” of the discipline, back toward holistic models of intelligence. In essence, many robotic applications that use reinforcement learning and learn from the world are far closer to this original conception of intelligence. It's a really rich path of inquiry, and a useful counterpoint to some of the recent enthusiasm about deep learning.